Platform Installation Introduction

Purpose and Scope

This document aims to assist users in installing and initially configuring Apache StreamPark.

Target Audience

Intended for system developers and operators who need to deploy Apache StreamPark in their systems.

System Requirements

Reference: https://streampark.apache.org/docs/user-guide/deployment#environmental-requirements

Hardware Requirements

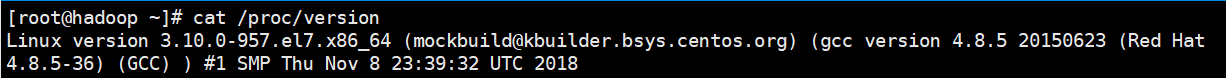

- This document uses Linux: 3.10.0-957.el7.x86_6

Software Requirements

Notes:

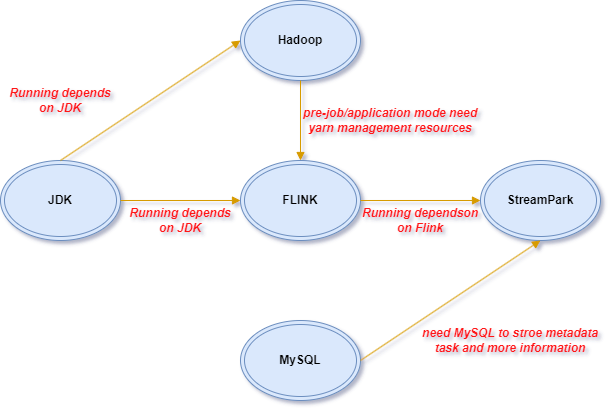

- If you are only installing StreamPark, Hadoop is not required.

- If Flink jobs are run in YARN application mode, Hadoop is required.

- JDK: 1.8+

- MySQL: 5.6+

- Flink: 1.12.0+

- Hadoop: 2.7.0+

- StreamPark: 2.0.0+
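To confirm the installed JDK meets the 1.8+ requirement, a small helper can normalise the version string that `java -version` prints. This is a minimal sketch. The names `java_major_version` and `check_java` are hypothetical helpers, and the code assumes the usual output format `version "1.8.0_181"` or `version "11.0.2"`.

```shell
# Hypothetical helper: turn a Java version string into its major version.
# Legacy strings use the "1.x" scheme (1.8.0_181 -> 8); newer ones start with
# the major number (11.0.2 -> 11, 17 -> 17).
java_major_version() {
  case "$1" in
    1.*) echo "$1" | cut -d. -f2 ;;
    *)   echo "$1" | cut -d. -f1 | cut -d- -f1 ;;
  esac
}

# Hypothetical helper: read the version printed by `java -version` (on stderr)
# and check it against the JDK 1.8+ requirement.
check_java() {
  local version major
  if ! command -v java >/dev/null 2>&1; then
    echo "java not found on PATH" >&2
    return 1
  fi
  version=$(java -version 2>&1 | awk -F'"' '/version/ { print $2; exit }')
  major=$(java_major_version "$version")
  case "$major" in
    ''|*[!0-9]*) echo "could not parse Java version: $version" >&2; return 1 ;;
  esac
  if [ "$major" -ge 8 ]; then
    echo "JDK $version meets the 1.8+ requirement"
  else
    echo "JDK $version is older than 1.8" >&2
    return 1
  fi
}
```

Running `check_java` on the JDK used in this document (1.8.0_181) should report that the requirement is met.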

Software versions used in this document:

- JDK: 1.8.0_181

- MySQL: 5.7.26

- Flink: 1.14.3-scala_2.12

- Hadoop: 3.2.1

Main component dependencies:

Pre-installation Preparation

JDK, MySQL, and Hadoop must be installed by the user beforehand.
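Before continuing, it can help to confirm that the prerequisite tools are reachable. The sketch below only checks whether commands are on PATH; it does not check versions. `missing_cmds` and `check_prereqs` are hypothetical helper names. The sketch also assumes the MySQL command-line client is installed on this host, even if the MySQL server runs elsewhere.

```shell
# Hypothetical helper: print each named command that cannot be found on PATH.
missing_cmds() {
  local cmd
  for cmd in "$@"; do
    command -v "$cmd" >/dev/null 2>&1 || echo "$cmd"
  done
}

# Hypothetical helper: fail with a readable message if any prerequisite is missing.
check_prereqs() {
  local missing
  missing=$(missing_cmds "$@")
  if [ -n "$missing" ]; then
    echo "Missing prerequisites: $(printf '%s' "$missing" | tr '\n' ' ')" >&2
    return 1
  fi
  echo "All prerequisites found: $*"
}
```

For example, run `check_prereqs java mysql`, and add `hadoop` to the list when Flink jobs will run in YARN application mode.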

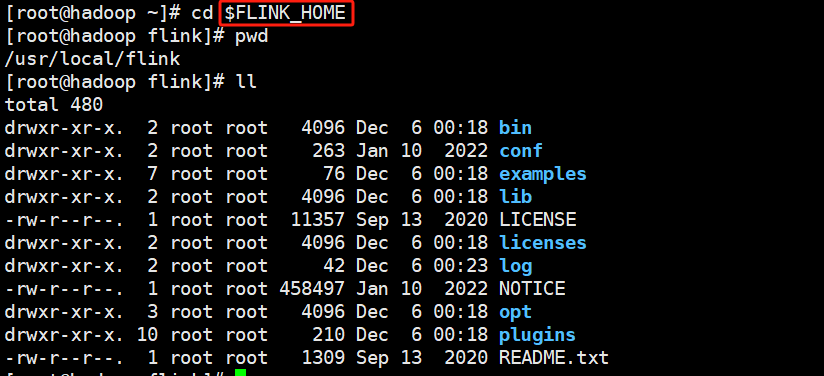

Download & Configure Flink

Download Flink

cd /usr/local

wget https://archive.apache.org/dist/flink/flink-1.14.3/flink-1.14.3-bin-scala_2.12.tgz

Extract

tar -zxvf flink-1.14.3-bin-scala_2.12.tgz

Rename

mv flink-1.14.3 flink
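The three steps above (download, extract, rename) can be wrapped in one function when Flink has to be installed on several hosts. This is a sketch under stated assumptions. `flink_tarball_url` and `install_flink` are hypothetical helpers. The code assumes other Flink releases follow the same archive URL pattern as the 1.14.3 link above, and that each archive extracts to a `flink-<version>` directory.

```shell
# Hypothetical helper: build the Apache archive URL of a Flink binary release,
# following the pattern of the 1.14.3 URL above.
flink_tarball_url() {
  echo "https://archive.apache.org/dist/flink/flink-$1/flink-$1-bin-scala_$2.tgz"
}

# Hypothetical helper: download, extract and rename Flink in a target directory.
# The body runs in a subshell ( ... ) so the `cd` does not leak into the caller.
install_flink() (
  version=$1 scala=$2 dest=${3:-/usr/local}
  cd "$dest" || exit 1
  if [ -e flink ]; then
    echo "$dest/flink already exists; refusing to overwrite" >&2
    exit 1
  fi
  wget "$(flink_tarball_url "$version" "$scala")" || exit 1
  tar -zxvf "flink-${version}-bin-scala_${scala}.tgz" || exit 1
  mv "flink-${version}" flink
)
```

`install_flink 1.14.3 2.12` reproduces the manual steps for the version used in this document. The existence check prevents `mv` from silently moving the new release *into* an existing `flink` directory.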

Configure Flink environment variables

# Set environment variables (vim ~/.bashrc), add the following content

export FLINK_HOME=/usr/local/flink

export PATH=$FLINK_HOME/bin:$PATH

# Apply environment variable configuration

source ~/.bashrc

# Verify: if the output is 'Version: 1.14.3, Commit ID: 98997ea', the configuration succeeded

flink -v
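If the setup is scripted or re-run, appending the exports blindly duplicates them in `~/.bashrc`. A minimal idempotent variant follows. `ensure_line` is a hypothetical helper that appends a line only when that exact line is not already in the file.

```shell
# Hypothetical helper: append a line to a shell rc file only if that exact line
# is not already present, so re-running the setup does not duplicate exports.
ensure_line() {
  local file=$1 line=$2
  touch "$file" || return 1
  grep -qxF -- "$line" "$file" || printf '%s\n' "$line" >> "$file"
}
```

Usage: `ensure_line ~/.bashrc 'export FLINK_HOME=/usr/local/flink'` and `ensure_line ~/.bashrc 'export PATH=$FLINK_HOME/bin:$PATH'`, followed by `source ~/.bashrc`. The single quotes keep `$FLINK_HOME` and `$PATH` unexpanded, so they are written literally, exactly as in the manual step.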

Introduce MySQL Dependency Package

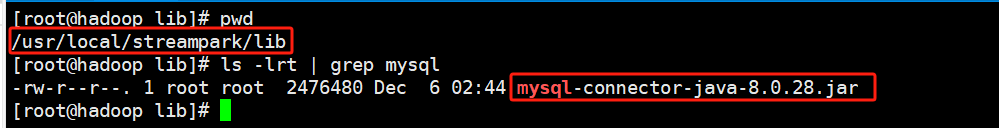

Reason: The license of the MySQL JDBC driver is incompatible with the Apache License 2.0, so the driver is not bundled. Users must download the driver JAR themselves and place it in $STREAMPARK_HOME/lib. Version 8.x is recommended; this document uses mysql-connector-java-8.0.28.jar.

cp mysql-connector-java-8.0.28.jar /usr/local/streampark/lib
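A quick check that the driver actually landed in the right place can save a failed startup (see "Cannot load driver class" under Common Issues). `check_mysql_driver` is a hypothetical helper. The sketch assumes the driver file name starts with `mysql-connector-`, and it defaults to `$STREAMPARK_HOME` or the `/usr/local/streampark` path used in this document.

```shell
# Hypothetical helper: report whether a MySQL JDBC driver JAR is present in the
# lib directory of a StreamPark installation.
check_mysql_driver() {
  local home=${1:-${STREAMPARK_HOME:-/usr/local/streampark}}
  local jar
  for jar in "$home"/lib/mysql-connector-*.jar; do
    # An unmatched glob stays literal, so -f is false when nothing matched.
    if [ -f "$jar" ]; then
      echo "Found driver: $jar"
      return 0
    fi
  done
  echo "No MySQL driver JAR in $home/lib" >&2
  return 1
}
```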

Download Apache StreamPark™

Download URL: https://dlcdn.apache.org/incubator/streampark/2.0.0/apache-streampark_2.12-2.0.0-incubating-bin.tar.gz

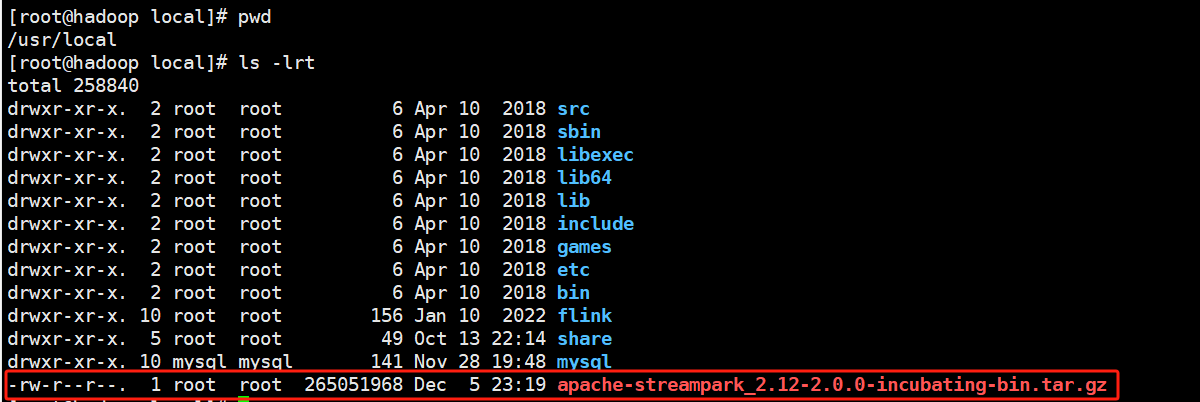

Upload apache-streampark_2.12-2.0.0-incubating-bin.tar.gz to the /usr/local directory on the server.

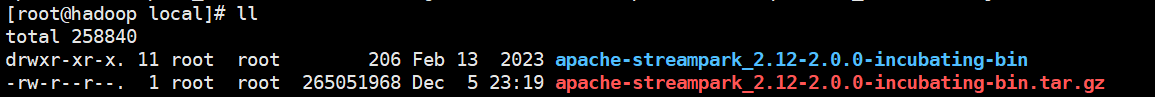

Extract

tar -zxvf apache-streampark_2.12-2.0.0-incubating-bin.tar.gz
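After extraction, it is worth confirming that the paths used later in this guide exist. `check_streampark_layout` is a hypothetical helper, and its list of paths is taken from the steps below. The archive extracts to `apache-streampark_2.12-2.0.0-incubating-bin`, while later steps refer to `/usr/local/streampark`. Pass whichever directory you actually use.

```shell
# Hypothetical helper: check that an extracted StreamPark directory contains the
# paths this guide relies on (startup script, config, lib and SQL scripts).
check_streampark_layout() {
  local home=$1 missing=0 path
  for path in bin/startup.sh conf lib script/schema/mysql-schema.sql script/data/mysql-data.sql; do
    if [ ! -e "$home/$path" ]; then
      echo "missing: $home/$path" >&2
      missing=1
    fi
  done
  return "$missing"
}
```

Example: `check_streampark_layout /usr/local/apache-streampark_2.12-2.0.0-incubating-bin`.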

Installation

Initialize System Data

Purpose: Create the database and tables that StreamPark depends on, and pre-populate the data it needs to run (e.g., web page menus and user information), so that later steps can proceed.

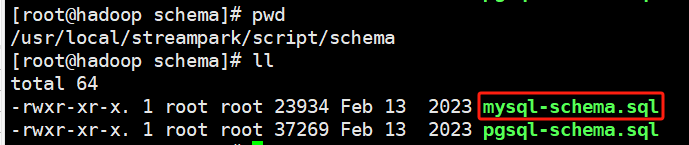

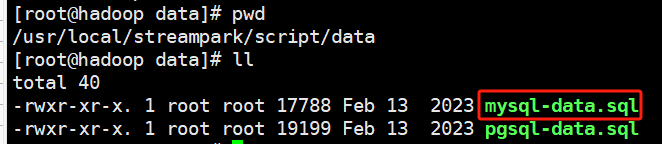

Locate the StreamPark Metadata SQL Files

Explanation:

- StreamPark supports MySQL, PostgreSQL, and H2.

- This document uses MySQL as an example; the PostgreSQL procedure is essentially the same.

Schema (database creation) script: /usr/local/apache-streampark_2.12-2.0.0-incubating-bin/script/schema/mysql-schema.sql

Data initialization script: /usr/local/apache-streampark_2.12-2.0.0-incubating-bin/script/data/mysql-data.sql

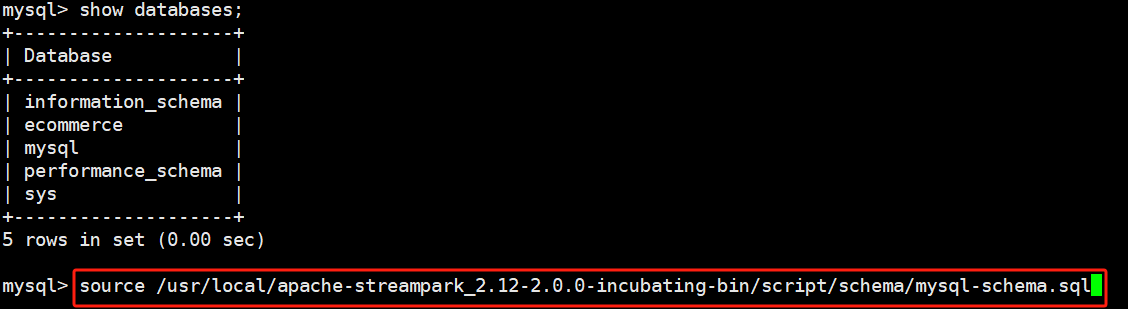

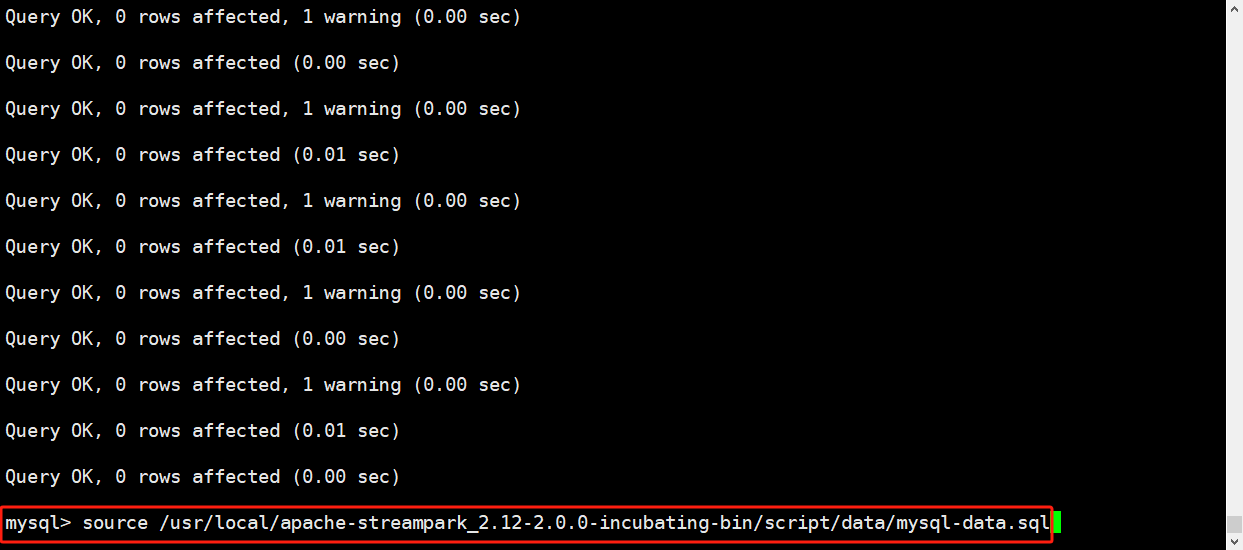

Connect to MySQL Database & Execute Initialization Script

source /usr/local/apache-streampark_2.12-2.0.0-incubating-bin/script/schema/mysql-schema.sql

source /usr/local/apache-streampark_2.12-2.0.0-incubating-bin/script/data/mysql-data.sql
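The same initialization can run non-interactively, which is handy for automated deployments. This sketch feeds the schema script and then the data script to the `mysql` client in one session, like running both `source` commands in the same prompt. `init_streampark_db` is a hypothetical helper. Like the interactive steps above, it assumes the scripts create and select the `streampark` database themselves.

```shell
# Hypothetical helper: run the schema script followed by the data script in a
# single mysql client session. Arguments after the StreamPark directory are
# passed to mysql unchanged (e.g. -h 127.0.0.1 -P 3306 -u root -p).
init_streampark_db() {
  local home=$1
  shift
  local schema="$home/script/schema/mysql-schema.sql"
  local data="$home/script/data/mysql-data.sql"
  [ -f "$schema" ] && [ -f "$data" ] || {
    echo "SQL scripts not found under $home/script" >&2
    return 1
  }
  cat "$schema" "$data" | mysql "$@"
}
```

Example: `init_streampark_db /usr/local/apache-streampark_2.12-2.0.0-incubating-bin -u root -p`. With `-p`, the client prompts for the password on the terminal, so piping the scripts through stdin still works.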

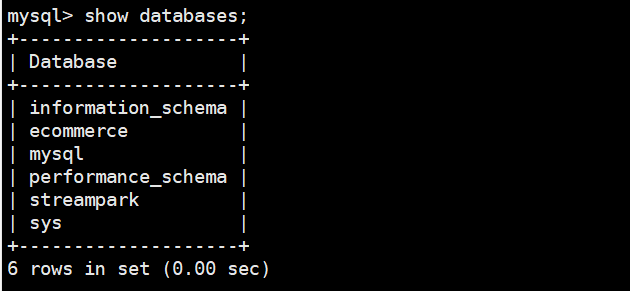

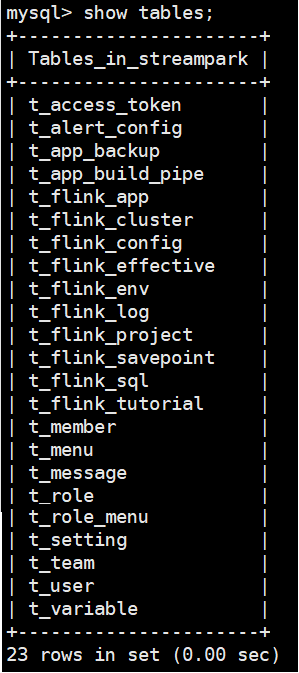

View Execution Results

show databases;

use streampark;

show tables;
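For scripted checks, the `show tables;` step can be reduced to a table count. `count_streampark_tables` is a hypothetical helper. It uses the standard `mysql` client options `-N` (no column names), `-B` (batch output), and `-e` (execute a statement). A result of 0 means either that the initialization did not run or that the client could not connect.

```shell
# Hypothetical helper: count the tables in the streampark database, the
# non-interactive equivalent of `use streampark; show tables;`.
# Arguments are passed to the mysql client (e.g. -u root -p).
count_streampark_tables() {
  mysql "$@" -N -B -e 'SHOW TABLES FROM streampark' | wc -l | tr -d ' '
}
```

Example: `[ "$(count_streampark_tables -u root -p)" -gt 0 ] && echo "StreamPark schema present"`.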

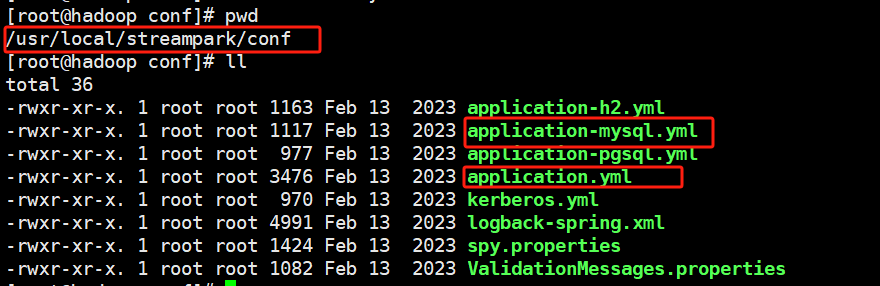

Apache StreamPark™ Configuration

Purpose: Configure the data sources needed for startup. Configuration file location: /usr/local/streampark/conf

Configure MySQL Data Source

vim application-mysql.yml

Change username, password, and the database IP and port in url to match your own environment.

spring:
  datasource:
    username: <database username>
    password: <database user password>
    driver-class-name: com.mysql.cj.jdbc.Driver
    url: jdbc:mysql://<database IP address>:<database port>/streampark?useSSL=false&useUnicode=true&characterEncoding=UTF-8&allowPublicKeyRetrieval=false&useJDBCCompliantTimezoneShift=true&useLegacyDatetimeCode=false&serverTimezone=GMT%2B8
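When the same datasource block has to be filled in for several environments, it can be generated from a few variables and then reviewed before being pasted into application-mysql.yml. `render_mysql_datasource` is a hypothetical helper that prints to stdout rather than overwriting the file, because the real file may contain other settings. The JDBC parameters are copied from the example above. Passwords containing YAML-special characters would need quoting, which this sketch does not handle.

```shell
# Hypothetical helper: print the spring.datasource section of
# application-mysql.yml for the given host, port, user and password.
render_mysql_datasource() {
  local host=$1 port=$2 user=$3 password=$4
  cat <<EOF
spring:
  datasource:
    username: ${user}
    password: ${password}
    driver-class-name: com.mysql.cj.jdbc.Driver
    url: jdbc:mysql://${host}:${port}/streampark?useSSL=false&useUnicode=true&characterEncoding=UTF-8&allowPublicKeyRetrieval=false&useJDBCCompliantTimezoneShift=true&useLegacyDatetimeCode=false&serverTimezone=GMT%2B8
EOF
}
```

Example with illustrative values: `render_mysql_datasource 127.0.0.1 3306 streampark_user 'change_me'`.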

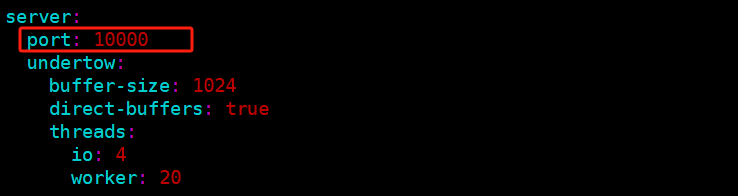

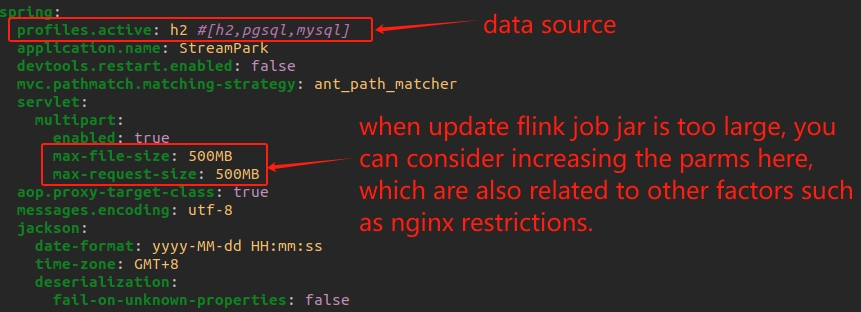

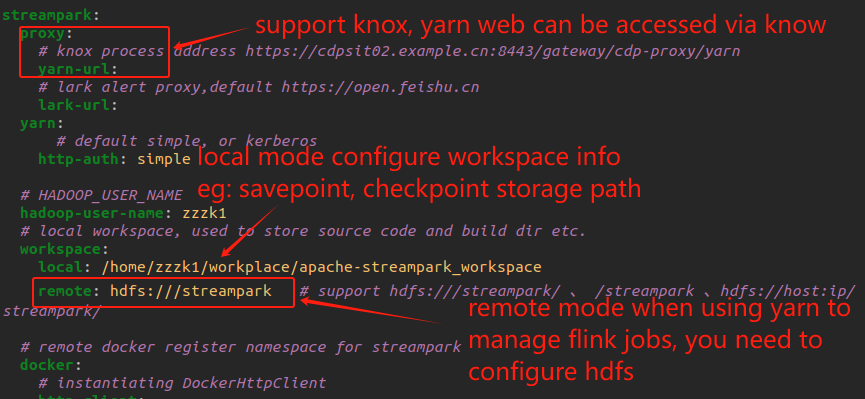

Configure Application Port, HDFS Storage, Application Access Password, etc.

vim application.yml

Key configuration items:

- server.port # 【Important】The default web port is 10000; change it if it conflicts with another service (e.g., Hive)

- knife4j.basic.enable # true means allowing access to Swagger API page

- knife4j.basic.password # Password required for accessing Swagger API page, enhancing interface security

- spring.profiles.active # 【Important】Selects which data source the system uses; this document uses mysql

- workspace.remote # Configure workspace information

- hadoop-user-name # If Hadoop is used, this user must have permission to operate on HDFS; otherwise an “org.apache.hadoop.security.AccessControlException: Permission denied” exception is reported

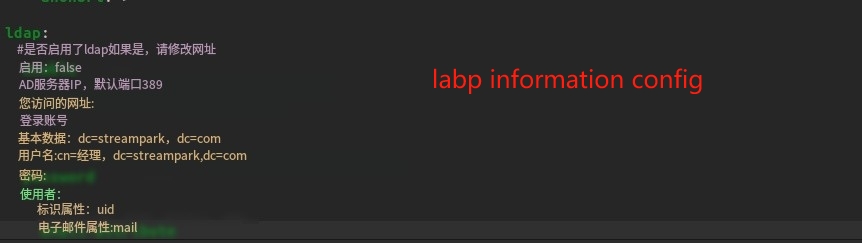

- ldap.password # The login page offers two login modes: username/password and LDAP. The LDAP password is configured here
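To check which values a configuration file actually sets for the keys listed above (for example, `server.port` or `spring.profiles.active`), a small lookup helper can be useful. `yaml_get` is a hypothetical helper and is not a YAML parser. It assumes 2-space indentation and plain `key: value` lines. It does not strip quotes or handle lists or flow style. Keys written with dots on one line, such as `profiles.active:`, resolve to the same dotted path.

```shell
# Hypothetical helper: print the value of a dotted key (e.g. server.port) from a
# simple block-style YAML file with 2-space indentation.
yaml_get() {
  awk -v target="$2" '
    /^ *#/ || /^ *$/ { next }              # skip comments and blank lines
    {
      line = $0
      sub(/^ +/, "", line)
      level = int((length($0) - length(line)) / 2)
      colon = index(line, ":")
      if (colon == 0) next
      key = substr(line, 1, colon - 1)
      value = substr(line, colon + 1)
      sub(/^ +/, "", value)
      sub(/ +#.*$/, "", value)             # drop trailing comments
      path[level] = key
      full = path[0]
      for (i = 1; i <= level; i++) full = full "." path[i]
      if (full == target && value != "") { print value; exit }
    }
  ' "$1"
}
```

Example: `yaml_get /usr/local/streampark/conf/application.yml server.port`.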

Main configuration example:

If a Flink job JAR is too large, its upload may fail; consider increasing max-file-size and max-request-size. Other limits in the actual environment, such as nginx restrictions, must also be taken into account.
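In Spring Boot applications, max-file-size and max-request-size are normally set as data sizes such as `500MB`, where Spring treats 1KB as 1024 bytes and 1MB as 1024 × 1024 bytes. The sketch below compares a job JAR against such a limit before you try to upload it. `size_to_bytes` and `jar_fits_limit` are hypothetical helpers. They handle only integer values with the B, KB, MB, and GB suffixes.

```shell
# Hypothetical helper: convert a Spring-style data size (e.g. 500MB) to bytes,
# using binary multiples as Spring Boot does.
size_to_bytes() {
  case "$1" in
    *GB) echo $(( ${1%GB} * 1024 * 1024 * 1024 )) ;;
    *MB) echo $(( ${1%MB} * 1024 * 1024 )) ;;
    *KB) echo $(( ${1%KB} * 1024 )) ;;
    *B)  echo "${1%B}" ;;
    *)   echo "$1" ;;   # bare number: already bytes
  esac
}

# Hypothetical helper: succeed if the JAR is no larger than the given limit.
jar_fits_limit() {
  local jar_bytes limit_bytes
  [ -f "$1" ] || { echo "no such file: $1" >&2; return 2; }
  jar_bytes=$(( $(wc -c < "$1") ))
  limit_bytes=$(size_to_bytes "$2")
  [ "$jar_bytes" -le "$limit_bytes" ]
}
```

Example: `jar_fits_limit my-flink-job.jar 500MB || echo "raise max-file-size / max-request-size"`, where `my-flink-job.jar` is a placeholder name.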

Knox is supported: some users run privately deployed Hadoop environments that are accessed through Knox.

workspace: Configure workspace information (e.g., savepoint and checkpoint storage paths).

ldap

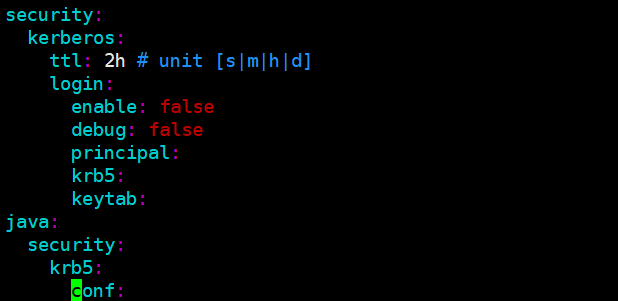

【Optional】Configuring Kerberos

Background: Enterprise Hadoop clusters often enforce security access mechanisms such as Kerberos. StreamPark can be configured with Kerberos as well, so that Flink authenticates through Kerberos when submitting jobs to the Hadoop cluster.

Modifications are as follows:

- security.kerberos.login.enable=true

- security.kerberos.login.principal=Actual principal

- security.kerberos.login.krb5=/etc/krb5.conf

- security.kerberos.login.keytab=Actual keytab file

- java.security.krb5.conf=/etc/krb5.conf
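Most Kerberos start-up failures come from unreadable files or a keytab that does not match the principal. The sketch below checks both before StreamPark is started. `check_kerberos_files` and `kerberos_login_test` are hypothetical helpers. The second one assumes the MIT Kerberos client tools (`kinit`, `klist`) are installed and that it runs as the same OS user that runs StreamPark.

```shell
# Hypothetical helper: verify that the krb5.conf and keytab referenced by the
# security.kerberos.login.* settings are readable by the current user.
check_kerberos_files() {
  local krb5=$1 keytab=$2 rc=0
  [ -r "$krb5" ] || { echo "krb5 config not readable: $krb5" >&2; rc=1; }
  [ -r "$keytab" ] || { echo "keytab not readable: $keytab" >&2; rc=1; }
  return "$rc"
}

# Hypothetical helper: obtain a ticket from the keytab for the principal and
# list it, confirming that the two actually match.
kerberos_login_test() {
  local principal=$1 keytab=$2
  kinit -kt "$keytab" "$principal" && klist
}
```

Example: `check_kerberos_files /etc/krb5.conf /path/to/user.keytab && kerberos_login_test user@EXAMPLE.COM /path/to/user.keytab`, where the principal and keytab path are placeholders.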

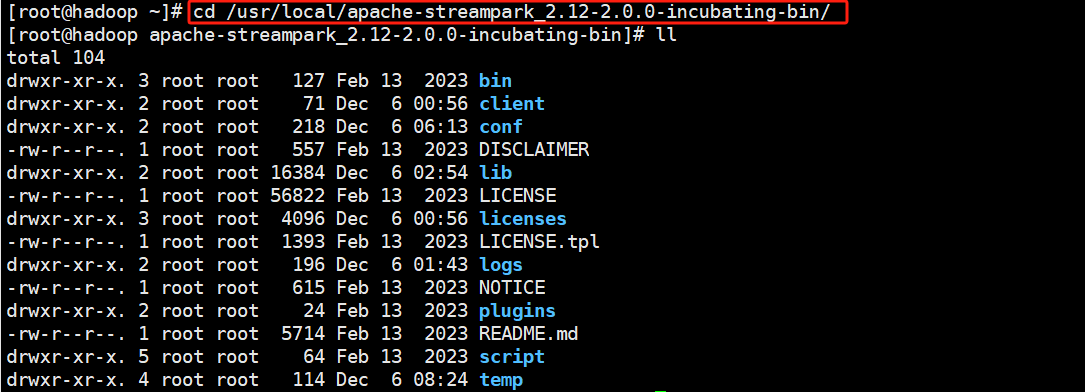

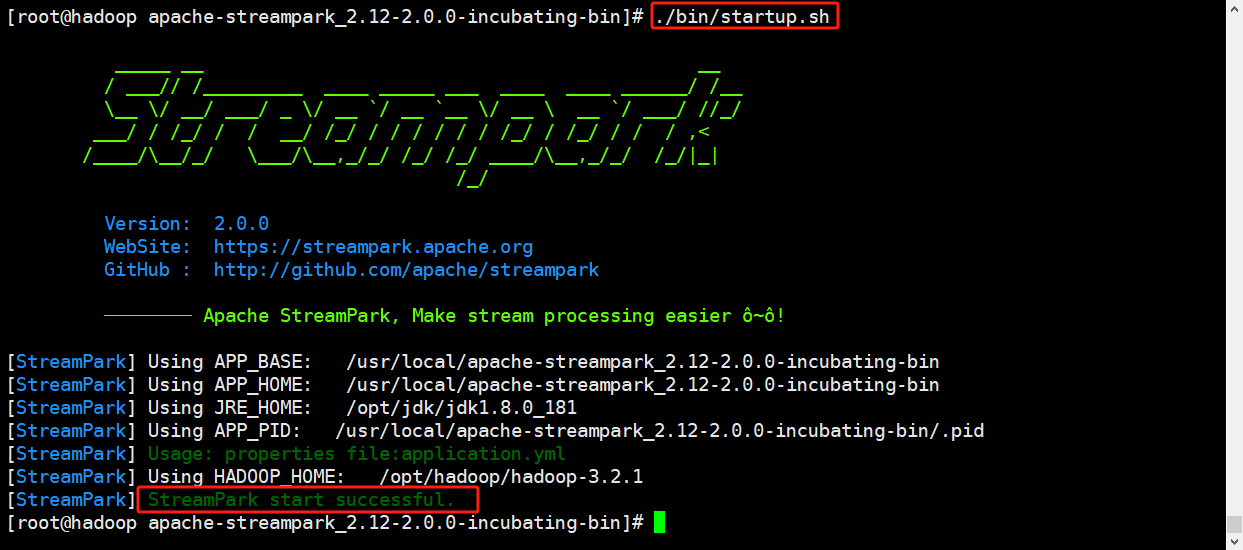

Starting Apache StreamPark™

Enter the Apache StreamPark™ Installation Path on the Server

cd /usr/local/streampark/

Start the Apache StreamPark™ Service

./bin/startup.sh

Check the startup log (purpose: confirm there are no error messages)

tail -100f log/streampark.out
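Instead of reading the whole log by eye, suspicious lines can be pulled out directly. `scan_startup_log` is a hypothetical helper. Its `ERROR|Exception` pattern is a heuristic, not an official list of StreamPark error markers. The default path assumes you are in the StreamPark installation directory, as in the step above.

```shell
# Hypothetical helper: print log lines that look like errors (with line numbers)
# and fail if any are found.
scan_startup_log() {
  local log=${1:-log/streampark.out}
  [ -f "$log" ] || { echo "log not found: $log" >&2; return 2; }
  if grep -nE 'ERROR|Exception' "$log"; then
    return 1
  fi
  echo "No ERROR/Exception lines in $log"
}
```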

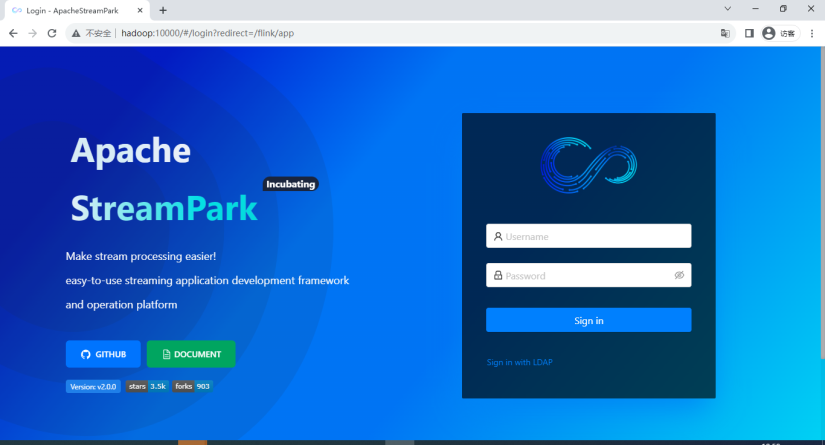

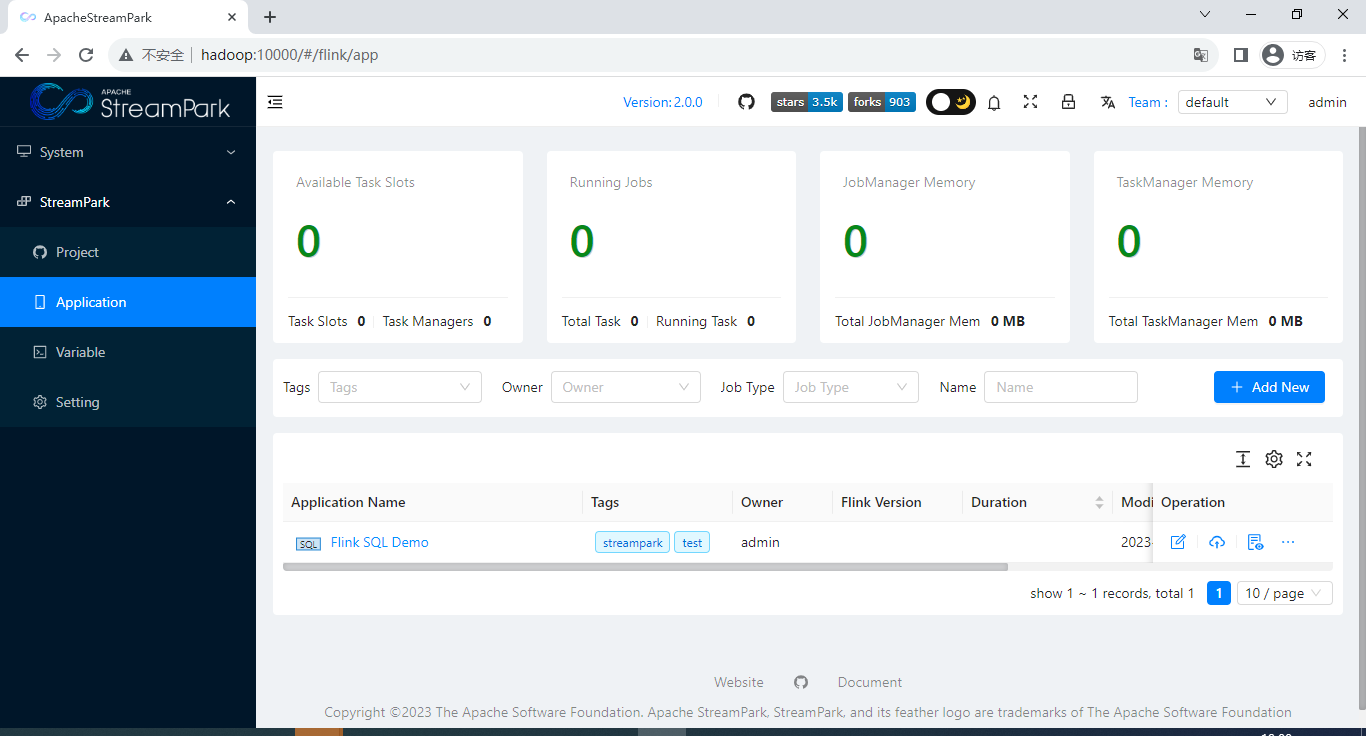

Verifying the Installation

# If the page opens normally, it indicates a successful deployment.

http://<StreamPark server IP or domain>:10000/

Default username/password: admin/streampark

Normal Access to the Page

System Logs in Normally

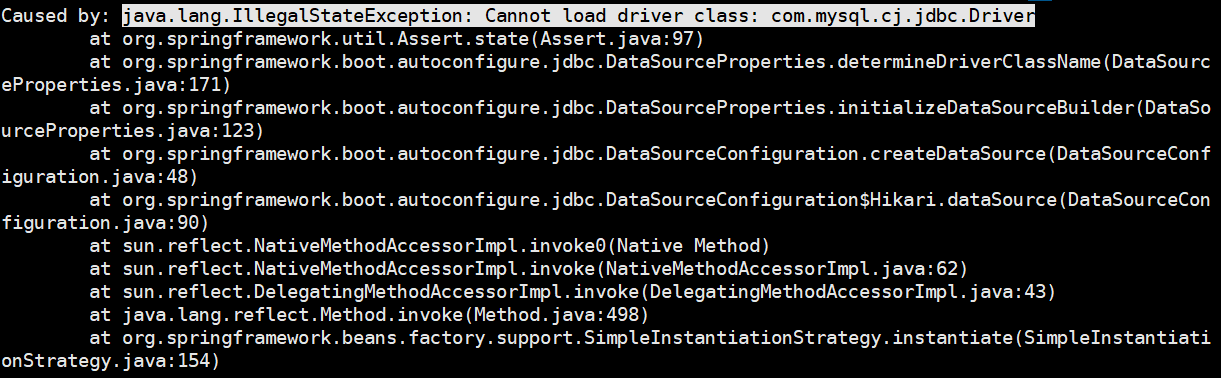

Common Issues

Cannot load driver class: com.mysql.cj.jdbc.Driver

Reason: The MySQL driver JAR is missing. See “Introduce MySQL Dependency Package” above.